This week’s picks lean into bigger-picture thinking—smart reads, a provocative listen, and some original thoughts from me at the end on why healthcare resists bold change.

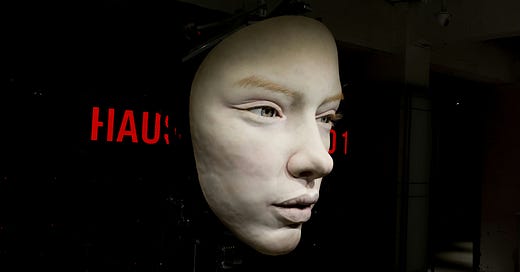

New on Freerange MD — The rise of digital intimacy

In this episode of Freerange MD, I spoke with James O’Donnell of MIT Technology Review about the surge in AI companions — chatbots designed to meet your every emotional, intellectual, or sexual need. Gen-Z isn’t just flirting with this tech — they’re forming relationships with it.

This is a short but unsettling episode. Give it a listen — and if it hits you, a quick rating or follow really helps spread the word.

James’ original Tech Review piece is here. He’s a great writer and a super smart conversationalist.

Don’t bring your whole self to the hospital

An outstanding opinion piece in the New York Times by a Harvard computer science professor pushes back on the growing expectation that every corner of higher education, and by extension every professional identity, must be politicized.

All academics are experts on narrow topics. Even when they intersect with the real world, our expertise in the facts does not give us authority over politics. Scientific research shows that vaccines work and climate change is real, but it cannot dictate whether vaccines should be mandated or fossil fuels restricted.

The same tension exists in medicine. The editorial sparked plenty of debate on X, especially among physicians, split between libertarian-leaning voices and those who believe doctors have a duty to take up sociopolitical causes. For some, advocacy has become a central professional identity. But while patients may hold strong views, most come to us to get better—not to have their ideology affirmed.

I see a middle ground, but I feel that medicine has become overly politicized since COVID.

Treat AI like electricity, not uranium

Despite all the hype—talk of superintelligence, AI rights, and comparisons to nuclear weapons—two Princeton researchers are urging us to treat AI like a normal technology. In a provocative essay in MIT Tech Review, Arvind Narayanan and Sayash Kapoor argue that AI’s real impact will unfold slowly, like electricity or the internet—not in a sudden, society-upending wave. They challenge loaded terms like “superintelligence” and instead suggest focusing on AI’s potential to worsen existing societal problems like inequality and misinformation. The real risk, they say, isn’t robot overlords—it’s AI quietly deepening the challenges we already face. Their advice? Skip the sci-fi panic and focus on strengthening democratic institutions, boosting technical literacy in government, and using AI wisely. In short: less drama, more governance.

Is this the start of a new Dark Age?

We usually think of the “Dark Ages” as something from the distant past—plagues, superstition, lost knowledge. But this powerful piece in Quillette suggests that we might be entering a new version of it today, not because of war or disease, but because of tech. We’re drowning in information, but it’s harder than ever to agree on what’s true. Institutions are losing trust, attention has become a commodity, and tech giants profit by keeping us distracted and divided. The old systems—media, democracy, even how we think—are showing cracks. Maybe the smartest thing we can do now isn’t to scroll harder, but to unplug, tune out the noise, and reclaim a little peace and perspective.

…the new dark age—the age of cacophonous technology, of information overload, of toxic clamour and attention-fuelled barbarism—may end when we retreat into silence.

Overheard on LinkedIn

If your doctor hands out GLP-1s without a full medical assessment, you don’t have a physician — you have a GLP-1 drug dealer. — Michael Albert, MD

Healthcare is lousy at the big stuff

This is loosely transcribed from street commentary I recorded in Austin’s Domain this weekend. I’ll post it to the FreerangeMD YouTube channel later today.

Healthcare’s really good at small change.

We’re brilliant at gradual improvement. In fact, we’re obsessed with incrementalism — slow, steady tweaks. And for really good reason: Small shifts in equipment sterilization or pre-op timeouts, for example, can save lives.

We’ve built an entire system around quality improvement. And the results? Life-changing. But there’s a big difference between looking immediately back to improve quality and looking forward to decide what should happen next.

And while we’re focused on perfecting the small stuff, we’re avoiding the big leaps. The kind of change that reimagines how care is delivered. Changes that challenge the WHOLE system, not just the parts.

This is because healthcare’s risk-averse by nature. These bold moves just feel like too much for most of us. Too risky. Too fast.

It’s interesting that during the 20th century, progress in healthcare came from inside the big institutions: academicians working in legacy hospitals in places like Boston, Baltimore and Philly, tinkering in labs and pushing things forward.

But now? Change in medicine and healthcare is coming from the edge. From startups. From technologists. From people outside the traditional system willing to bend the rules that we won’t. And they’re not bound by tradition or existing processes, and they’re not slowing down. So the question isn’t if change is coming — it’s how we adopt it and partner with it to move the chains.

Of course, small change keeps us going. But transformational change? That’s what’s going to fix the biggest problems we’re facing in healthcare. What are you doing to help with the big steps?

Thanks for reading this far. Again, please pass this along to someone who might like it.