Measure and unmeasure

In healthcare we focus on what we can count | Plus some interesting ideas and news

Thanks for opening. This week: a big idea about the things we shouldn't measure, plus some ideas and news that caught my attention. Happy long weekend to those celebrating in the States.

The Big Idea

Measure and unmeasure

It’s no secret: in healthcare we are fixated on measurement. We measure everything. We graph and trend everything.

I stumbled on an interesting quote from management guru Peter Drucker, who once wrote, “The balance between the measurable and the non-measurable is a central problem of management.”

It turns out this is also the central challenge in modern healthcare. And we’re not very good at keeping that balance.

As we measure disease down to the gene, protein, and biomarker, we are increasingly focusing on what we can count. Metrics are, of course, essential to quality and safety. We want precision in lab diagnosis. We want zero error when sterilizing surgical instruments. And technology does one thing to get us there: it creates efficiencies. Aldous Huxley wrote in Brave New World Revisited, “In an age of advanced technology, inefficiency is the sin against the Holy Ghost.”

But not everything in healthcare should be held to a yardstick. And not everything should be efficient. The conversation with a woman who has just learned she is carrying a baby with trisomy 18, for example, can’t be optimized for efficiency, scale, or speed. The real human work of a crucial conversation like this is impossible to measure. And even if we found a way to measure it or put it on a graph, that doesn’t mean the measurement would matter.

🔺 Some things are meant to be done imperfectly. And knowing what not to measure may become one of the most important questions in healthcare.

Ideas

Medicine’s next job: Algorithmic consultant

An npj Digital Medicine article makes the case for a new kind of clinician, the algorithmic consultant: a physician trained in both medicine and data science who helps translate AI into safe, effective practice. This consultant would guide physicians on which model to use, how to interpret outputs, and when not to use an algorithm. They’d also govern a ‘formulary’ of models. Fascinating.

🔺 While the case can be made that hospitals are on the verge of managing an “ecosystem of models” as complex as their formulary, I’m not sold on algo consultants just yet. In the early days of telemedicine we were sold on the idea of ‘virtualists’. But we sobered up after COVID and realized that telemedicine was a universal tool, not a specialty.

Our leadership problem: The speed of change

This idea is from Esko Kilpi, a Finnish management guru whom few have heard of. He passed away quietly a few years ago but, to me, remains one of the smartest thinkers on what's happening in the world. I keep a massive database of quotes in Obsidian and stumbled on this a couple of days back:

The fundamental problem today: the speed embedded in our institutional frameworks vs. the speed of technological change. The key management capability is not being in control, but to participate and influence the formation of sensemaking and meaning. It is about creating a context that enables connectedness, interaction and trust between people.

🔺 For healthcare leaders, the job isn’t control. It’s about creating the trust and connectedness that let clinicians and teams make sense of change together.

AI isn't coming for your job, it's coming for your workflow

Excellent read from Dr. Craig Joseph, who describes the implementation of AI as a design problem. For healthcare leaders, the lesson is clear: the models are ready, but adoption hinges on solid design, trust, and workflows that enhance the clinician's experience.

We’re at an inflection point. The models are ready. The question is whether our systems, our workflows, and our leadership are ready to meet them. If your AI strategy doesn’t include human-centered design, implementation science, and a plan to earn clinician trust, it’s not a strategy; it’s a science fair project.

What comes after ChatGPT for search?

This will stretch your mind. It’s from a Benedict Evans post on LinkedIn:

Old: generative search

New: generative content

Next: generative product

🤯 Talk amongst yourselves.

News

OpenAI sued for wrongful death

A California family has filed the first wrongful death lawsuit against OpenAI after their 16-year-old son, Adam Raine, died by suicide in April. His parents discovered he had been confiding in ChatGPT, which not only expressed empathy but provided detailed guidance on suicide methods. OpenAI acknowledged that safeguards can degrade in long interactions and said it is working to strengthen protections, particularly for teens. | New York Times (gift link, you're welcome)

Matteo Grassi had a nice breakdown here. OpenAI can do better.

The fix is to recognize a higher duty of care when two signals are present at the same time: a minor, and repeated risk flags over time. If your product can nail my shoe size from a scroll and remember what I said last week to sell me a moisturizer, it can remember when to stop being helpful and start being safe.

This probably belongs under Digital exhaust, but it felt ironic right after this story. A WSJ editorial (gift link, you’re welcome) by a CMS health tech advisory board member outlines how patients can and should use GPT in their care journey. It's otherwise a pretty good piece but blows it with this suggestion: for life-altering decisions, use the most advanced AI system you can.

Big grant for non-profits

OpenAI just announced a $50 million People-First AI Fund to help U.S. nonprofits harness AI for social good. Applications open September 8 and close October 8, with unrestricted grants distributed by year’s end. The fund will back organizations using AI to expand access, improve service delivery, and innovate in fields like education, economic opportunity, and healthcare. For health systems and nonprofit partners, this is a huge opportunity to secure resources for AI projects that improve care delivery and community health. | OpenAI

Verily gets out of the device business

Verily shut down its expensive medical device program. Looks like Alphabet is betting that the future of healthcare won’t be in hardware, but in scalable AI. | TechCrunch

🔺 Wondering who’s gonna own the device layer if Alphabet walks away?

Digital exhaust

911 call centers are so understaffed that they are turning to AI.

Neologism: Clanker. The new go-to slur for AI and robots.

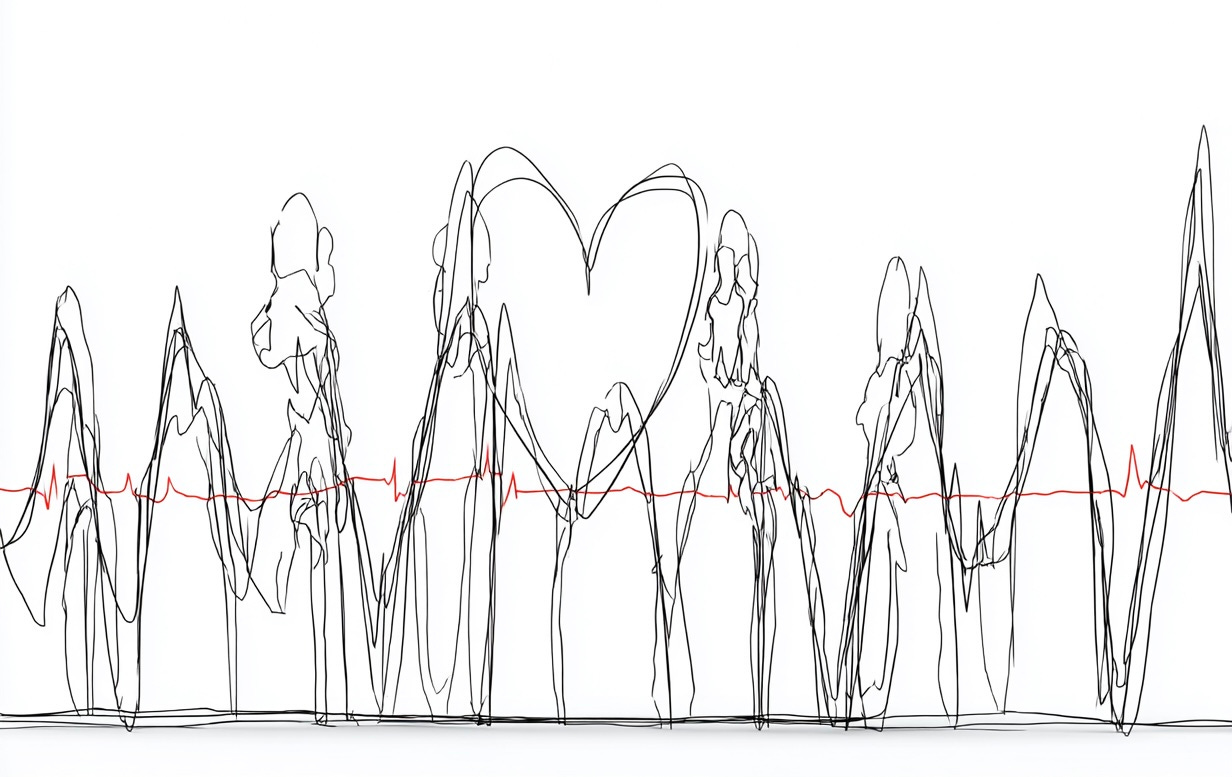

The image used in this letter was created on Midjourney.

Wonderful illustration of what we shouldn't be measuring.